AI makes us less human

AI systems take away opportunities for us to do what we do best: listen, empathize, and make caring, thoughtful decisions.

The narrative

The next time you hear a tech CEO or a politician saying that AI will allow us to do all sorts of amazing things, that it represents a 'fourth industrial revolution', that it allows us to streamline and optimize government operations, or that it's going to transform the delivery of medical care - and therefore we need to devote huge amounts of public money and resources to it - ask yourself this: where's the evidence to support this?

Major tech companies have already poured several hundred billion dollars into buying chips, building data centres, burning electricity and draining freshwater resources. Has your life improved in any meaningful or transformative way?

What is AI good at?

Well, computers tend to be a good choice for tasks that need a lot of calculation, but that don't require any understanding of the world. AI is no different. Some AI systems are built for those types of applications; AlphaFold, for example, models the folded shapes of proteins, produces quite accurate results, and may prove to be very useful for medical research.

DeepMind, the group that developed AlphaFold, has also built systems that try to tackle very different kinds of problems. One of these is AlphaGo, which plays Go, a 2,500-year-old game. The rules of the game are simple, but the game is difficult to master - and Go's large 19x19 board (compare that to an 8x8 chess board) means there are a lot more possible moves that a computer has to consider.

And sure enough, it wasn't until 2016 that the most advanced Go-playing computers began consistently winning against the world's top-ranked human players. By that point, chess computers had already been beating world champion grandmasters for a couple of decades. Getting to this point with Go was seen as a major accomplishment.

Since then, presumably, Go-playing computer systems have only become more dominant ... right?

"As a human it would be quite easy to spot" - Kellin Pelrine

As it turns out, even these computational juggernauts have glaring weaknesses.

Go is played by taking turns placing stones on the board's 19x19 grid, and attempting to surround groups of the opposing player's stones, in order to remove them and capture territory.

So you'd think that an AI system like KataGo (widely considered to be on par with the AlphaGo system that beat top-ranked Lee Sedol in 2016) would be able to spot its opponent doing something really obvious like, say, placing their stones on the board in a big loop around the computer's pieces.

But this pretty much describes how Kellin Pelrine, a highly ranked amateur Go player and professional AI researcher, beat KataGo in 2023.

The victory wasn't a one-time fluke, either: Pelrine won 14 out of 15 games against KataGo using the same strategy. Which makes it very clear that no matter how clever these AI systems might sometimes appear to be, they don't actually 'know' or 'understand' anything. When it comes down to it, they're still just calculators.

To expand on this: an AI system might be capable of doing a specific task fairly reliably, but what it can't do is generalize its problem-solving approach and apply it to different problems. In fact, you could argue it's even worse than that: as Pelrine's victory showed, these AI systems don't have what we'd call 'common sense' or 'insight' even when you're only looking at the extremely narrow task the AI is specifically built to perform.

Unthinking, unfeeling

This lack of common sense can have consequences that are a lot worse than a string of losses in a board game.

In the Netherlands, for example, an algorithmic system incorrectly flagged thousands of Dutch families as having fraudulently received childcare benefits. Even something like forgetting a signature on a document, or having dual citizenship, could result in a family being flagged as fraudsters, and being forced to pay back tens of thousands of euros they had (legitimately!) received over the course of years.

These mistakes destroyed lives. Families were torn apart, people lost their homes, many spiraled into depression. These people were the casualties of a push for 'government efficiency' at the expense of empathy, care, and basic common sense and decency.

Automation bias

OK, sure, you might say, algorithms don't have common sense or empathy - but what if there'd been a human being double-checking the system's outputs to make sure it wasn't making tragic mistakes?

Well, we happen to have scientific studies and real-life examples of AI deployments where there were humans in the loop, monitoring and double-checking the systems' outputs, but unfortunately, it doesn't seem to have helped a whole lot.

In one scientific study, titled Automation Bias in Mammography, researchers rigged an AI to occasionally miscategorize a mammogram. The AI would show its assessments to professional radiologists, after which the researchers asked the radiologists to categorize the mammogram. It turned out that it didn't matter whether a radiologist was fresh out of college or had decades of experience: the accuracy of their diagnoses dropped dramatically in the cases where the AI was wrong.

The explanation? Human beings have a tendency to over-value decisions made by automated systems, to the point where we'll stop trusting our own skills and knowledge, and defer to the machine.

Luckily, those misdiagnoses happened in a controlled setting, and no patients' lives were affected. But there are plenty of examples of automation bias leading to significant real-world harm, too.

For instance, there are multiple cases where "100% match" results from facial recognition technology have led U.S. police to wrongfully arrest people.

In many of these cases, there were basic facts that should have made it clear that people were completely innocent, even before they'd been arrested. Things like: having different tattoos, being obviously pregnant despite no eyewitness statements having mentioned this, or having been at work in a different state when the crime was committed. In some cases, it took over two years for people's names to be cleared.

At this point, it seems like it might be worth asking: when it comes to using AI in medical diagnosis, or using facial recognition in policing, are we sure these things are really a benefit to society? Or are they actually making us all less healthy, and less safe?

Offloading responsibility

As if it weren't bad enough that automation bias makes people place too much faith in these systems, imagine going one step further: deliberately implementing AI for the purpose of offloading moral responsibility and accountability.

Suppose, for example, you're running a health insurance company. You sell policies, but it's in your financial interest to pay out as few claims as possible without losing too many customers. It's also in your financial interest to keep your payroll as small as you can.

What's the solution? Automate things! That way, when you deny a claim and someone complains, the overworked customer service rep has no reason to look into it further, because the complex computer system has already made an unbiased determination based on all the available information.

Of course, as we've already seen in the previous examples, these systems aren't actually good at making reasonable judgements - but that hasn't stopped insurance companies from introducing AI into their claims review processes.

In the US, there are currently 11 states that have either introduced or passed legislation that limits, or even outright bans, the use of AI for reviewing health insurance claims.

Obscuring discrimination

Automated systems can also offload responsibility (and make legal liability harder to determine) by hiding discriminatory practices and bias behind algorithmic complexity.

For example, a bank might be barred from collecting data on its customers' race or ethnicity when determining who's eligible for a business loan, but might still find a way to use things like postal codes, perhaps in combination with other data points, as a proxy for race and/or ethnicity.

This makes it incredibly hard to detect discriminatory practices. Particularly when it comes to AI systems, it's nearly impossible to tell from the outside how a computer made a decision.

In other words, not only can the bank discriminate against people, they can also do so while claiming that their systems are unbiased - after all, they don't collect data on race and ethnicity! The computer takes a whole bunch of input data, puts it all in an opaque box, and spits out a binary yes/no determination that can't be argued or reasoned with ... or really even understood by the person working at the bank who's passing on the good or bad news.
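To make the proxy idea concrete, here's a minimal sketch in Python. Everything in it is made up for illustration: the applicants, the postal codes "A1" and "B2", the labels "group_x" and "group_y", and the approval rule are all hypothetical, and no real bank's system is being described. The point is only that a rule which never looks at group membership can still produce very different outcomes per group when postal code and group happen to be correlated.

```python
from collections import defaultdict

# Hypothetical applicants as (postal_code, group) pairs.
# In this made-up data, most of group_x lives in "A1" and most of group_y in "B2".
APPLICANTS = [
    ("A1", "group_x"), ("A1", "group_x"), ("A1", "group_x"), ("A1", "group_y"),
    ("B2", "group_y"), ("B2", "group_y"), ("B2", "group_y"), ("B2", "group_x"),
]

# Hypothetical decision rule: approve only applicants from postal code "A1".
APPROVED_POSTAL_CODES = {"A1"}


def decide(postal_code: str) -> bool:
    """Approve or deny using postal code alone; group is never an input."""
    return postal_code in APPROVED_POSTAL_CODES


def approval_rates(applicants):
    """Return the fraction of approved applicants for each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for postal_code, group in applicants:
        total[group] += 1
        approved[group] += decide(postal_code)  # True counts as 1
    return {group: approved[group] / total[group] for group in total}


rates = approval_rates(APPLICANTS)
print(rates)  # {'group_x': 0.75, 'group_y': 0.25}
```

The decision function can honestly be described as "not using race or ethnicity", yet the approval rates differ by a factor of three in this toy data. A real system with hundreds of inputs would be far harder to audit than this one-line rule.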

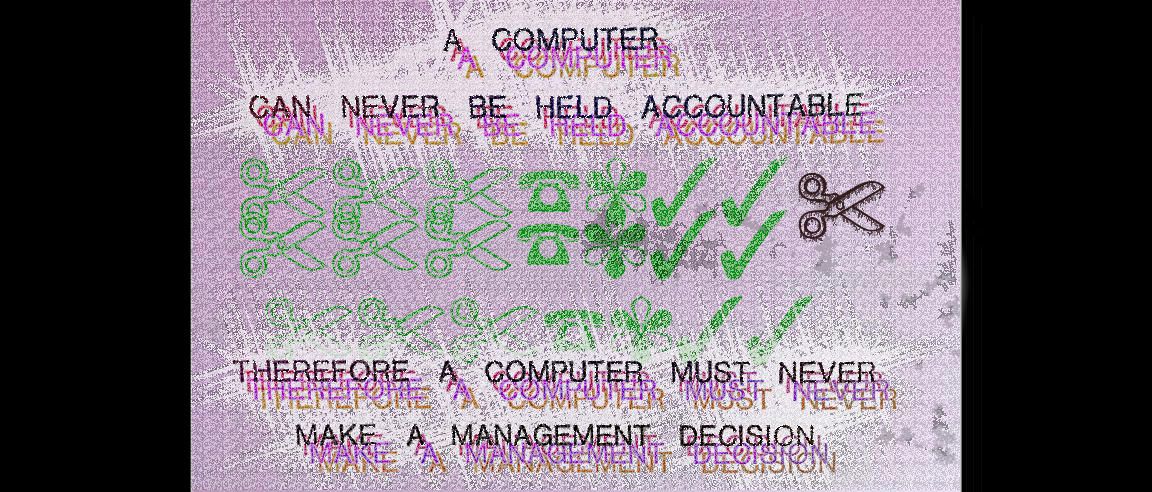

Holding AI systems accountable

I feel like there's really only one conclusion: we should never allow AI systems to make judgement calls that directly affect people's lives. Someone wants to simulate protein folding? Go for it. Explore board game strategy? Be my guest. But AI should never be allowed to make decisions about things like people's healthcare, their access to government programs or services, or the likelihood that they're guilty of a crime.

So next time you hear a politician or business leader talking about all the amazing things we're going to be able to do with AI, ask them: what are these amazing things, exactly?